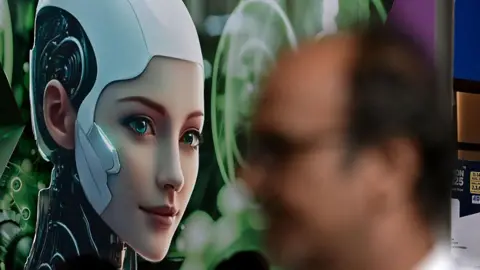

Researchers warn YouTube is pushing AI-generated political fakes.

Digital-forensics teams monitoring global elections say YouTube’s algorithm is surfacing and amplifying deepfake political videos, sometimes reaching millions before they are flagged. Analysts told Reuters the platform is being overwhelmed by the speed and sophistication of new AI-generated content.

Ben Colman, CEO of Reality Defender, said:

“It’s very difficult for platforms to catch everything. The speed at which AI content is being created is outpacing the guardrails.”

The warnings come as more than 40 countries head into major election cycles.

YouTube enforces policies — but detection lags behind.

YouTube says it removes manipulated election content or adds labels when appropriate. A spokesperson told Reuters:

“We remove content that aims to mislead viewers about elections, and we apply labels to synthetic or altered content when appropriate.”

But researchers say enforcement is inconsistent, and deepfakes often spread faster than YouTube can respond.

Sam Gregory of WITNESS warned:

“We’re entering an era when people can’t tell what’s real — and platforms aren’t ready for that scale of confusion.”

Experts raise concerns about selective takedowns.

Analysts tracking the videos observed that some deepfakes were removed instantly, while others — including those targeting vulnerable regions — stayed online for days. The inconsistency has fueled concerns about potential political or geographic bias in what the platform removes.

Colman said the uneven pattern makes it hard to understand how moderation decisions are made:

“It’s hard to know what’s getting flagged, why, and when. The lack of transparency creates room for perceived bias.”

Digital-rights groups told Reuters that selective enforcement can shape voter perception, especially when misleading videos in certain countries remain online long enough to influence early narratives.

YouTube denied political favoritism but did not explain how enforcement methods differ across its regional teams.

Governments demand clarity as election season approaches.

European regulators have already requested detailed information from YouTube on how political content is ranked and what measures are being taken to stop synthetic media during elections. Officials warn the platform could become a vector for coordinated misinformation campaigns if detection systems fail.

As one analyst told Reuters:

“This isn’t the future — this is already happening, and it’s accelerating.”

A critical test for the world’s largest video platform.

With election misinformation moving at AI speed, researchers say YouTube must close the enforcement gap quickly — or risk becoming a global amplifier for synthetic political deception.